Counting Sort | Pseudo Code | Example | Analyzing Complexity

Till now we have introduced insertion sort, merge sort, heap sort and quick sort. Out of which we know that merge sort and heap sort has a worst case of O(n*lgn) and quick sort has an average case of O(n*lgn).

Till now all the above algorithms mentioned use comparison as a medium to arrange elements in ascending or descending order. Now we are going to work on a very interesting algorithm which does not uses comparison as a medium to sort the numbers. It’s called counting sort. For a n sequence of numbers it runs in omega(n*lgn) time. Counting sort sorts the element in linear time. In this blog post we are going to- Write a pseudocode for counting sort

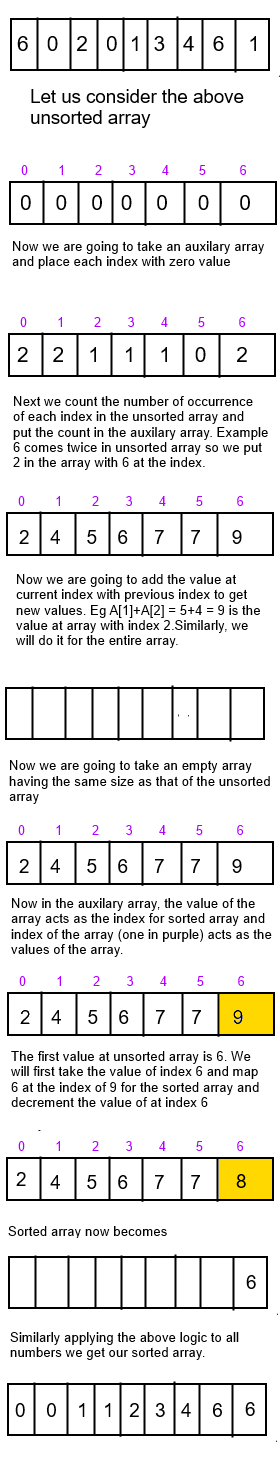

- Explain it via example

- Analyze the complexity of the algorithm and write a c++ program for the same.

As shown in the above example we have an auxiliary array C and initially place all the value of C as 0. Next we take the elements of unsorted array A and use it as indexes for auxiliary array. Then we add the values at current position and next position of the array and use it as the index for sorted array to get our sorted array as shown in above example.

Analyzing counting sort

We have used 4 for loops however since they are used in parallel. At worst the loop is parsed through A.length which gives sorting time as θ (n) where n is A.length.

An important property of counting sort is that it is stable. Numbers with the same value appears in the output array as it appears in the input array. Normally the property of stability is important only when data are carried around with the element being sorted. Such property proves that counting sort is stable.

That’s all folks! We have seen an algorithm which sorts data in linear time only when the elements of the array are positive.

As shown in the above example we have an auxiliary array C and initially place all the value of C as 0. Next we take the elements of unsorted array A and use it as indexes for auxiliary array. Then we add the values at current position and next position of the array and use it as the index for sorted array to get our sorted array as shown in above example.

Analyzing counting sort

We have used 4 for loops however since they are used in parallel. At worst the loop is parsed through A.length which gives sorting time as θ (n) where n is A.length.

An important property of counting sort is that it is stable. Numbers with the same value appears in the output array as it appears in the input array. Normally the property of stability is important only when data are carried around with the element being sorted. Such property proves that counting sort is stable.

That’s all folks! We have seen an algorithm which sorts data in linear time only when the elements of the array are positive.

Never miss a story from us, subscribe to our newsletter

Never miss a story from us, subscribe to our newsletter